Software is to the 21st century what oil was to the 20th century. It is what drives our society forward and makes new opportunities possible. Three of the four largest companies in the world (Alphabet, Microsoft and Amazon) began their ascent by writing software, while the largest company in the world, Apple, built hardware and wrote software.

In addition, those involved in the software industry are some of the best rewarded in society. They often earn two, three or even four times the average salary, which puts many of them on par with doctors. Put simply, software’s importance to the modern world cannot be overstated.

Where though did software come from? And more broadly, what is it? Software is a catch-all term for code, data, algorithms, programming languages, operating systems and all the other aspects of a modern computer which don’t relate to the hardware.

Programming languages began to appear in the decades after Alan Turing set out his ideas for the Turing Machine and the General Purpose Computer in 1936. The person often credited with creating the first programming language is Konrad Zuse. He described the language Plankalkül between 1942 and 1945 and used it to design a chess game, the first known computer game. Sadly, due to the War and the post-War restrictions, Zuse’s work was stymied and Plankalkül wasn’t widely adopted.

During the 1950s other programming languages began to appear. In 1951 Heinz Rutishauser published a description of his language Superplan, which was inspired by Zuse’s work. Rutishauser would go on to help develop ALGOL 58, which was widely adopted in the late 50s and early 60s. Other languages which appeared included the Address Programming Language, developed in the Soviet Union in 1955 by Kateryna Yushchenko. Fortran followed in 1957, developed by a team led by John Backus at IBM.

The above work all relates to third-generation programming languages. These are regarded as higher-level programming languages which make it easier for software developers to programme computers. They rely, though, on the existence of machine code and assembly language, which are classified as first and second-generation programming languages respectively. Machine code is a set of binary commands, zeros and ones, which a CPU executes directly. Assemblers convert human-readable assembly commands into machine code, while compilers translate higher-level programming languages into assembly or machine code.
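The relationship between human-readable commands and machine code can be sketched with a toy assembler. This is a minimal illustration only: the mnemonics, the 3-bit opcode and 5-bit operand layout, and the `assemble` function are all hypothetical and do not correspond to any historical machine.

```python
# Hypothetical 8-bit instruction word: 3-bit opcode followed by a 5-bit operand.
# This instruction set is invented for illustration; it is not a real CPU's.
OPCODES = {
    "LOAD": 0b000,
    "ADD": 0b001,
    "SUB": 0b010,
    "STORE": 0b011,
    "HALT": 0b111,
}


def assemble(source: str) -> list[str]:
    """Translate assembly text into a list of 8-bit binary strings."""
    words = []
    for raw_line in source.splitlines():
        line = raw_line.split(";")[0].strip()  # drop comments and whitespace
        if not line:
            continue
        parts = line.split()
        mnemonic = parts[0].upper()
        operand = int(parts[1]) if len(parts) > 1 else 0
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        if not 0 <= operand < 32:
            raise ValueError(f"operand out of range: {operand}")
        word = (OPCODES[mnemonic] << 5) | operand
        words.append(format(word, "08b"))
    return words


program = """
LOAD 10   ; copy the value at address 10 into the accumulator
ADD 11    ; add the value at address 11
STORE 12  ; write the result to address 12
HALT
"""

machine_code = assemble(program)
print(machine_code)
```

Each line of readable text becomes one word of zeros and ones, which is the only form the (imaginary) processor would understand.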

The first three generations of programming languages all appeared at a similar time. The two people credited with creating Assembly Language and assemblers are Kathleen Booth and David Wheeler. While working on the ARC2 project at Birkbeck in 1947, Booth defined the theory and basic implementation of Assembly Language. A year later, in 1948, David Wheeler created the first assembler while working on the EDSAC project at Cambridge University. Assembly Language and assemblers are still used today as a layer between higher-level programming languages and machine code.

In 1945 John von Neumann and his colleagues proposed the von Neumann architecture based on their work on EDVAC. It outlined the architecture for a stored-programme computer: essentially a computer with random-access memory that could store a programme and execute it. One of the first computers to implement this architecture was the Manchester Baby, which went into operation in 1948. The computer scientist Tom Kilburn wrote its first programme, 17 instructions of machine code, and it was executed by the Baby on June 21st 1948. This was the first time a piece of software was stored and executed on a computer.
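The stored-programme idea can be sketched in a few lines: instructions and data live side by side in the same memory, and the machine simply fetches, decodes and executes whatever it finds. The sketch below is a hypothetical toy machine. Its opcodes, its word layout and the `encode` and `run` helpers are assumptions made for illustration, and it is not a model of the Manchester Baby’s actual instruction set.

```python
# Hypothetical opcodes for a toy accumulator machine (not the Baby's real set).
LOAD, ADD, SUB, STORE, HALT = range(5)


def encode(opcode: int, address: int = 0) -> int:
    """Pack an opcode and an 8-bit address into a single memory word."""
    return (opcode << 8) | address


def run(memory: list[int], max_steps: int = 10_000) -> list[int]:
    """Fetch, decode and execute instructions held in the same memory as the data."""
    accumulator = 0
    program_counter = 0
    for _ in range(max_steps):
        word = memory[program_counter]               # fetch
        opcode, address = word >> 8, word & 0xFF     # decode
        program_counter += 1
        if opcode == LOAD:                           # execute
            accumulator = memory[address]
        elif opcode == ADD:
            accumulator += memory[address]
        elif opcode == SUB:
            accumulator -= memory[address]
        elif opcode == STORE:
            memory[address] = accumulator
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    raise RuntimeError("step limit reached without HALT")


# Addresses 0-3 hold the programme; addresses 4-6 hold its data.
memory = [
    encode(LOAD, 4),
    encode(ADD, 5),
    encode(STORE, 6),
    encode(HALT),
    2,  # address 4: first operand
    3,  # address 5: second operand
    0,  # address 6: result is written here
]

result = run(memory)
print(result[6])
```

Changing the first four words changes what the machine does without touching `run`, which stands in for the hardware.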

The history of software before Turing, Zuse, Booth and Kilburn is cloudy. However, very few of the ideas which appeared in the post-Turing age were original. For instance, Ada Lovelace is often cited as the first person to write a computer algorithm. This appeared in the notes she added to the 1843 paper “Sketch of the Analytical Engine”, which was about Charles Babbage’s Analytical Engine.

Both Lovelace and Babbage considered machines or computers in terms of symbol manipulation and software. Like Zuse, Babbage considered the idea of creating a chess game his Analytical Engine could run. He also designed a version of noughts and crosses (tic-tac-toe) and considered the idea of creating the world’s first arcade where the public would pay to play his games.

As a diversion he dabbled in games-playing machines. He came to the conclusion that any game of skill could be played by an automaton. The Analytical Engine already had several of the necessary properties — memory, ‘foresight’ and the capacity to take alternative courses of action automatically, a feature computer scientists would now call conditional branching.

Babbage and Lovelace weren’t the only people to consider how to programme a machine or analogue computer. There were many engineers and scientists working on the idea of machines driven by binary commands long before Turing and the Manchester Baby. Herman Hollerith is often credited with creating binary punch cards to power his electromechanical tabulating machines in the 1880s.

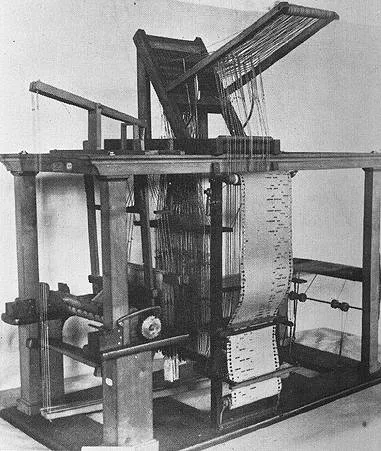

However, machine programming powered by punch cards began long before Hollerith’s tabulating machines. Babbage had considered the idea in his various engine designs. Also, Jacquard Looms, which appeared in the early 1800s, worked off the same principle to manufacture textiles with complex patterns. They ran off a long chain of binary punched cards, but even Joseph Marie Jacquard’s work wasn’t original. The idea of programming looms with punched cards or tape can be traced back to the early 1700s.

Before the 1700s, the only references we can make to software are tenuous and come from mathematics and astronomy. We derive the term algorithm from the name of the 8th-9th century Persian mathematician Muhammad ibn Musa al-Khwarizmi, who is often called the father of algebra. The astrolabe is a 2,000-year-old programmable tool to aid navigation and timekeeping. It could be reprogrammed, or reconfigured, using metal disks which stored astronomical data.

There are many examples of intricate machines and analogue computers before the 1700s, such as clocks and the Antikythera Mechanism. However, in all these cases the machine’s behaviour and the hardware are one. The behaviour is defined by the intricate configuration of springs, cogs and gears. Software, by contrast, is based on the principle that behaviour and hardware should be separate. In essence, it is the abstraction of behaviour from hardware.
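The separation of behaviour from hardware can be sketched using the punched-card looms mentioned earlier. In the hypothetical example below, the `weave` function plays the role of the fixed machine, and the card chains are plain data that decide what it produces. The names and the card encoding (1 for a hole, 0 for no hole) are assumptions chosen for illustration.

```python
def weave(cards: list[list[int]]) -> list[str]:
    """The fixed 'loom': each card produces one row of cloth.

    A hole (1) raises a thread and shows as '#'; no hole (0) shows as '.'.
    """
    return ["".join("#" if hole else "." for hole in card) for card in cards]


# Two different card chains for the same, unchanged loom.
stripes = [
    [1, 0, 1, 0],
    [1, 0, 1, 0],
]
checks = [
    [1, 0, 1, 0],
    [0, 1, 0, 1],
]

for row in weave(stripes):
    print(row)
print()
for row in weave(checks):
    print(row)
```

The loom never changes; swapping the chain of cards is enough to change the pattern, which is the principle the paragraph above describes.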

As described above, this process of abstraction began in the 1700s with the introduction of looms driven by punched tape and cards. There were then two important developments in the 1800s which proved the value and scalability of the idea. In 1804 the Jacquard Loom revolutionised the textile industry. It dramatically lowered the cost of patterned textiles, and by 1836 there were 7,000 Jacquard Looms in operation in Great Britain. Later, in the 1880s, Herman Hollerith used a similar principle with electromechanical tabulating machines to revolutionise data processing and the US census. His machines were so successful that he’d go on to found the company which would become IBM.

It was clear by the end of the 19th century that the abstraction of machine behaviour was an incredibly valuable idea. But it wasn’t until Alan Turing’s work in the 1930s that the final step toward software and full abstraction began. His theoretical work on the Decision Problem led him to define the general purpose computer and many principles underlying modern software. This final step was completed by those working on the stored-programme computer in the 1940s. The idea was developed by von Neumann and his colleagues working on EDVAC, and implemented fully for the first time by Freddie Williams and Tom Kilburn while working on the Manchester Baby.

The age of software had begun. Machine behaviour was fully abstracted, and this enabled billions of machines to be programmed to perform almost any behaviour required. Over the past 70 years this has generated wealth on an unprecedented scale. Mega corporations have built whole industries based on millions of computers interacting with billions of people. And it was all achieved by a simple idea: that behaviour should be abstracted from the machine.

Useful Resources:

- https://hackaday.com/2018/08/21/kathleen-booth-assembling-early-computers-while-inventing-assembly/

- https://cacm.acm.org/blogs/blog-cacm/262424-why-are-there-so-many-programming-languages/fulltext

- https://www.dcs.bbk.ac.uk/about/history/

- https://www.britannica.com/technology/assembly-language

- https://www.scienceandindustrymuseum.org.uk/objects-and-stories/jacquard-loom

- https://spectrum.ieee.org/the-jacquard-loom-a-driver-of-the-industrial-revolution

- https://fetmode.fr/cvmt/metiersUS.htm